🦈 Sharkticon

12 Jun 2017 … Attention Is All You Need 🔎

On June 12, 2017, a research paper came out that would prove to be a game-changer in AI: “Attention Is All You Need”. It introduced the Transformer architecture, which was set to replace RNNs (sequential models), first in natural language processing. Transformers soon became the state of the art across a wide range of tasks, as ViT (Vision Transformer) demonstrated a few years later in computer vision.

SmartShark … The Little Brother 👶

Meanwhile, at PoC, the first student innovation center, a project was under way: SmartShark, a cyber attack detection system built on deep learning models, in particular the LSTM architecture (a specific type of RNN).

This small project could detect simple DDoS and man-in-the-middle attacks: a promising PoC, but still far from catching every type of attack.

Sharkticon, kicking into high gear 🦈

Now, connect the dots between Transformers and SmartShark, which detects cyber attacks with LSTMs: why not use Transformers to detect cyber attacks instead?

That’s the whole idea behind Sharkticon, which relies on anomaly detection rather than on learning attack patterns.

In other words, instead of learning what an attack looks like, the model learns what a healthy network looks like, and anything that deviates from it raises an alert.

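Combined with Transformers, this approach could change network intrusion detection: since the model never needs examples of a given attack, it can in principle flag attacks it has never seen before!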
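To make the “learn what healthy looks like” idea concrete, here is a minimal Python sketch. It is not Sharkticon’s code: the single feature (packets per second), the z-score rule and the threshold `k` are illustrative assumptions, and the `HealthyProfile` class is hypothetical. The profile is fitted on healthy traffic only, and anything too far from it is flagged.

```python
import statistics


class HealthyProfile:
    """Hypothetical baseline of healthy traffic for one numeric feature."""

    def __init__(self, k: float = 3.0) -> None:
        self.k = k  # number of standard deviations that counts as "different"
        self.mean = 0.0
        self.std = 0.0

    def fit(self, healthy_samples: list[float]) -> "HealthyProfile":
        # Only healthy traffic is used here: no attack examples are needed.
        self.mean = statistics.fmean(healthy_samples)
        self.std = statistics.pstdev(healthy_samples)
        return self

    def is_alert(self, value: float) -> bool:
        # z-score: distance from the healthy mean, in standard deviations.
        if self.std == 0:
            return value != self.mean
        return abs(value - self.mean) / self.std > self.k


if __name__ == "__main__":
    # Made-up packets-per-second measurements from a healthy network.
    healthy_pps = [100, 104, 98, 101, 97, 103, 99, 102]
    profile = HealthyProfile(k=3.0).fit(healthy_pps)
    print(profile.is_alert(101))   # False: looks like normal traffic
    print(profile.is_alert(5000))  # True: e.g. a flood never seen in training
```

The key design point is that `fit` never sees an attack: the 5,000 packets-per-second window is flagged simply because it looks nothing like the healthy baseline.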

Technically speaking, how does it work? 🛠️

Well, the aim of this little blog post isn’t to get too technical: if you want all the details, I suggest you head straight to GitHub.

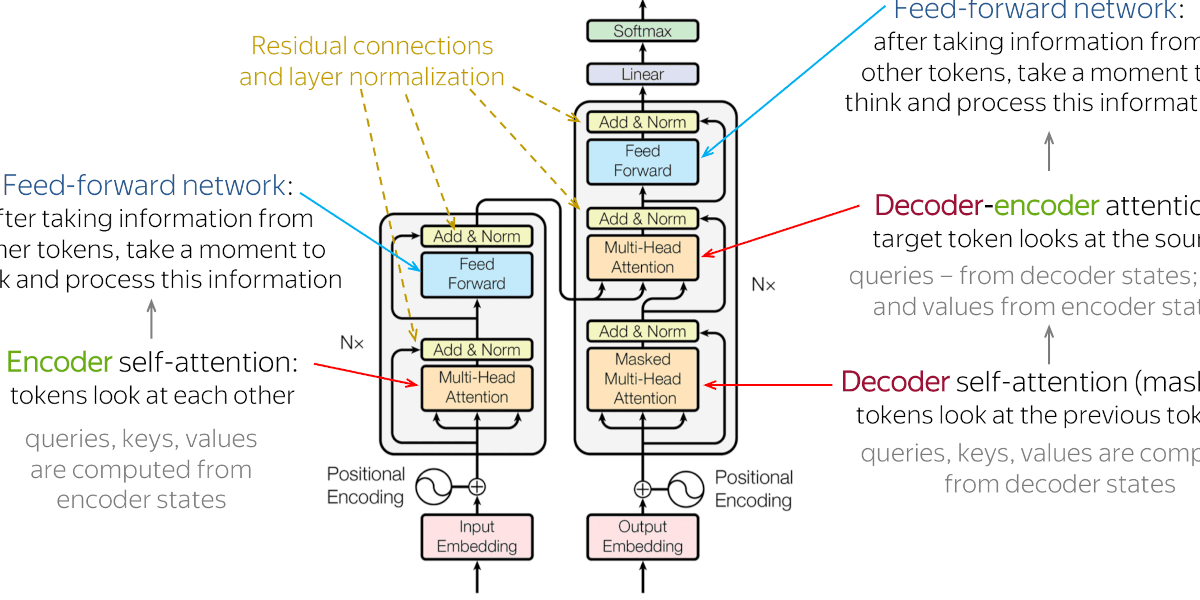

That said, to put it simply, the Transformer’s attention mechanism lets the model grasp the context of a whole sentence, and that is the big novelty.

In an RNN, the model only looked at what came before, not necessarily at what might come after. With their system of queries, keys and values, Transformers let each element attend to every other element, so the entire context of the information is retained.

For our project, this means understanding the whole network, not just the 10 or 20 packets that came before.
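For readers who want to see the query/key/value mechanism in action, here is a minimal sketch of scaled dot-product attention, the core operation from the paper, written in plain Python rather than TensorFlow for readability. It is a simplification, not our implementation: a single head, no learned projection matrices, no masking, and the toy input vectors are made up.

```python
import math

Matrix = list[list[float]]


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> tuple[Matrix, Matrix]:
    """Return (outputs, weights). Each output row is a weighted mix of ALL
    value rows, so every position can draw on the entire sequence."""
    d_k = len(k[0])
    weights = []
    for q_row in q:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k)
                  for k_row in k]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * v_row[j] for w, v_row in zip(w_row, v)) for j in range(len(v[0]))]
        for w_row in weights
    ]
    return outputs, weights


if __name__ == "__main__":
    # Three toy "packet" vectors. In self-attention, Q, K and V all come from
    # the same sequence (here used directly, without learned projections).
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out, w = attention(x, x, x)
    for row in w:
        print([round(p, 3) for p in row])
```

Each row of `weights` sums to 1 and spans every position in the sequence, which is what lets a Transformer look at the whole context instead of a fixed window of previous packets.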

On the code side, we implemented a Transformer from scratch in Python with TensorFlow, and used the Packet2Vec algorithm (a variant of Word2Vec) to turn packets into vectors that we can apply arithmetic operations to.
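To give an idea of what “vectorizing packets” means, here is a simplified Python sketch in the spirit of Packet2Vec. The exact Packet2Vec algorithm is not reproduced here: the field-based tokenization, the averaging step and the hand-written `EMBEDDINGS` table are assumptions for illustration (a Word2Vec-style model would learn these vectors from real traffic).

```python
import math

# Hypothetical token embeddings. In a Word2Vec-style model such as Packet2Vec
# these vectors would be learned from captured traffic, not written by hand.
EMBEDDINGS: dict[str, list[float]] = {
    "proto=TCP": [0.9, 0.1, 0.0],
    "proto=UDP": [0.1, 0.9, 0.0],
    "dport=80": [0.8, 0.2, 0.1],
    "dport=53": [0.2, 0.8, 0.1],
    "flags=SYN": [0.5, 0.0, 0.9],
    "flags=ACK": [0.6, 0.1, 0.2],
}


def tokenize(packet: dict[str, str]) -> list[str]:
    # One "word" per header field, e.g. {"proto": "TCP"} -> "proto=TCP".
    return [f"{field}={value}" for field, value in sorted(packet.items())]


def packet_vector(packet: dict[str, str]) -> list[float]:
    # Average the embeddings of the known tokens (unknown tokens are skipped).
    vectors = [EMBEDDINGS[t] for t in tokenize(packet) if t in EMBEDDINGS]
    if not vectors:
        raise ValueError("packet has no known tokens")
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # 1.0 means same direction, 0.0 means orthogonal (unrelated) vectors.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


if __name__ == "__main__":
    web_ack = packet_vector({"proto": "TCP", "dport": "80", "flags": "ACK"})
    web_syn = packet_vector({"proto": "TCP", "dport": "80", "flags": "SYN"})
    dns = packet_vector({"proto": "UDP", "dport": "53"})
    # Similar packets end up close together in the vector space.
    print(round(cosine_similarity(web_ack, web_syn), 3))
    print(round(cosine_similarity(web_ack, dns), 3))
```

Once packets live in a vector space, comparing them is plain arithmetic: here the two web packets come out much closer to each other than to the DNS packet.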

Our Transformer predicts the state of the network at time t+1; an anomaly detection algorithm then compares this prediction with what is actually observed to decide whether to raise an alert.
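The “predict, then compare” step could look something like the following Python sketch. The post does not specify which anomaly detection algorithm Sharkticon uses, so the Euclidean distance and the 99th-percentile threshold below are assumptions, and the function names are hypothetical; the Transformer’s prediction is simply passed in as a vector.

```python
import math
import statistics


def prediction_error(predicted: list[float], observed: list[float]) -> float:
    # Euclidean distance between what the model expected and what arrived.
    return math.dist(predicted, observed)


def calibrate_threshold(healthy_errors: list[float], percentile: int = 99) -> float:
    # The errors the model makes on known-healthy traffic define "normal";
    # the threshold is a high percentile of those errors.
    cut_points = statistics.quantiles(healthy_errors, n=100, method="inclusive")
    return cut_points[percentile - 1]


def should_alert(predicted: list[float], observed: list[float], threshold: float) -> bool:
    # Alert when reality is further from the prediction than healthy traffic usually is.
    return prediction_error(predicted, observed) > threshold


if __name__ == "__main__":
    # Made-up prediction errors measured on a healthy validation capture.
    threshold = calibrate_threshold([0.10, 0.12, 0.08, 0.11, 0.09])
    print(should_alert([0.5, 0.5], [0.52, 0.49], threshold))  # False
    print(should_alert([0.5, 0.5], [0.95, 0.05], threshold))  # True
```

Calibrating the threshold on errors from healthy traffic keeps this step consistent with the anomaly detection idea: the system only needs to know what normal prediction errors look like, never what an attack looks like.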

Conclusion of the project 🏁

It was certainly the hardest project I’ve ever taken on: starting from scratch to rebuild an architecture that was, at the time, very recent and complex, while I was only in my first year of computer science studies.

Still, we got some results, and even though implementing it from scratch wasn’t the best choice in terms of performance, it allowed me to master this architecture.

I was able to present the project at AI meetups, conferences and trade fairs. In the end, the project was a success, though not production-ready.